Some projects just go wrong.

It’s a fact of life. Projects go over budget, blow their schedules, squander their resources. Sometimes they go off the rails so spectacularly that there’s nothing you can do except (literally) pick up the pieces and try to learn whatever lessons you can so you don’t repeat the failure in the future.

Last week I got a phone call from a developer who was looking for some advice about exactly that. He’s being brought in to repair the damage from a disastrous software project. Apparently the project completely failed to deliver. I wasn’t 100% clear on the details—neither was he, since he’s just being brought in now—but it sounded like the final product was so utterly unusable that the company was simply scrapping the whole thing and starting over. This particular developer knows a lot about project management, and even teaches a project management course for other developers in his company. He’d heard me do a talk about project failure, and wanted to know if I had any advice, and maybe a postmortem report template or a lessons learned template.

I definitely had some advice for him, and I wanted to share it with you. Postmortem reports (reports you put together at the end of the project after taking stock of what went right and wrong) are an enormously valuable tool for any software team.

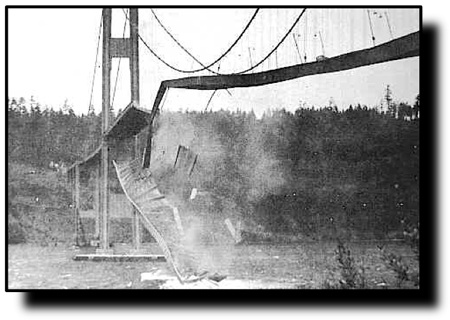

But first, let’s take a minute to talk about a bridge in the Pacific Northwest.

The tragic tale of Galloping Gertie

One of my favorite failed project case studies is Galloping Gertie, which was the nickname that nearby residents gave to the Tacoma Narrows Bridge. Jenny and I talk about it in our “Why Projects Fail” talk because it’s a great project failure example—and not just because it failed so spectacularly. It’s because the root causes for this particular project failure should sound really familiar to a lot of project managers, and especially to people who build software.

The Tacoma Narrows Bridge opened to the public on July 1, 1940. This photo was taken on November 7 of the same year:

There were no human casualties, but despite heroic rescue attempts, the bridge disaster claimed the life of a cocker spaniel named Tubby.

Jenny and I showed a video of the bridge collapsing during a presentation of our “Why Projects Fail” talk a while back in Boston. After the talk, a woman came up to us and introduced herself as a civil engineer. She gave us a detailed explanation of the structural problems in the bridge. Apparently it’s one of the classic civil engineering project failure case studies: there were aerodynamic problems, structural problems due to the size of the supports, and other problems that combined to cause the resonance that gave the bridge its distinctive “gallop.”

But one of the most important lessons we took away from the bridge collapse isn’t technical. It has to do with the designer.

[A]ccording to Eldridge, “eastern consulting engineers” petitioned the PWA and the Reconstruction Finance Corporation (RFC) to build the bridge for less, about which Eldridge meant the renowned New York bridge engineer Leon Moisseiff, designer and consultant engineer of the Golden Gate Bridge. Moisseiff proposed shallower supports—girders 8 feet (2.4 m) deep. His approach meant a slimmer, more elegant design and reduced construction costs compared to the Highway Department design. Moisseiff’s design won out, inasmuch as the other proposal was considered to be too expensive. On June 23, 1938, the PWA approved nearly $6 million for the Tacoma Narrows Bridge. Another $1.6 million was to be collected from tolls to cover the total $8 million cost.

(Source: Wikipedia)

Think back over your own career for a minute. Have you ever seen someone making a stupid, possibly even disastrous decision? Did you warn people around you about it until you were blue in the face, only to be ignored? Did your warnings turn out to be exactly true?

Well, from what I’ve read, that’s exactly what happened to Galloping Gertie. There was plenty of warning from many people in the civil engineering community who didn’t think this design would work. But these warnings were dismissed. After all, this was designed by the guy who designed the Golden Gate Bridge! With credentials like that, how could he possibly be wrong? And who are you, without those credentials, to question him? The pointy-haired bosses and bean counters won out. Predictably, their victory was temporary.

Incidentally, some people refer to this as one kind of halo effect: a person’s past accomplishments give others undue confidence in his performance at a different job, whether or not he’s actually doing it well. It’s a nasty little problem, and it’s a really common root cause of project failure, especially on software projects. I’ve lost count of the number of times I’ve encountered really terrible source code written by a programmer who’s been referred to by his coworkers as a “superstar.” Every time it happens, I think of the Tacoma Narrows Bridge.

But there’s a bigger lesson to learn from the disaster. When you look at the various root causes—problematic design, cocky designer, improper materials—one thing is pretty clear. The Tacoma Narrows Bridge was a failure before the first yard of concrete was poured. Failure was designed into the blueprints and materials, and even the most perfect construction would fail if it used them.

Learning from project failures

This leads me back to the original question I was asked by that developer: how do you take stock of a failed project? (Or any project, for that matter!)

If you want to gain valuable experience from investigating a project—especially a failed one—it’s really important that you write down the lessons you learned from it. That shouldn’t be a surprise. If you want to do better software project planning tomorrow, you need to document your lessons learned today. You can think of a postmortem report as a kind of “lessons learned report” that helps you document exactly what happened on the project so you can avoid making the same missteps in the future.

So how do we take stock of a project that went wrong? How do we find root causes? How do we come up with ways to prevent this kind of problem in the future?

The first step is talking to your stakeholders… all of them. As many as you can find. You need to find everyone who was affected by the project, anyone who may have an informed opinion, and figure out what they know. This can be a surprisingly difficult thing to do, especially when you’re looking back at your own project. If people were unhappy (and people often are, even when the final product was nearly perfect), they’ll give you an earful.

This makes your life more difficult, because it’s hard to be objective when someone’s leveling criticisms at you (especially if they’re right!). But if you want to get the best information, it’s really important not to get defensive. You never know who will give you really valuable feedback until you ask them, and it often comes from the most unexpected places. As developers, we have a habit of dismissing users and business people because they don’t understand all of the technical details of the work we do. But you might be surprised at how much your users actually understand about what went wrong—and even if they don’t, you’ll often find that listening to them today can help make them more friendly and willing to listen to you in the future.

Talking to people is really important, and having discussions is a great way to get people thinking about what went wrong. But most effective postmortem project reviews involve some sort of survey or checklist that lets you get written feedback from everyone involved in or affected by the project. Jenny and I have a section on building postmortem reports in our first book, Applied Software Project Management, that has a bunch of possible postmortem survey questions:

- Were the tasks divided well among the team?

- Were the right people assigned to each task?

- Were the reviews effective?

- Was each work product useful in the later phases of the project?

- Did the software meet the needs described in the vision and scope document?

- Did the stakeholders and users have enough input into the software?

- Were there too many changes?

- How complete is each feature?

- How useful is each feature?

- Have the users received the software?

- How is the user experience with the software?

- Are there usability or performance issues?

- Are there problems installing or configuring the software?

- Were the initial deadlines set for the project reasonable?

- How well was the overall project planned?

- Were there risks that could have been foreseen but were not planned for?

- Was the software produced in a timely manner?

- Was the software of sufficient quality?

- Do you have any suggestions for how we can improve for our next project?

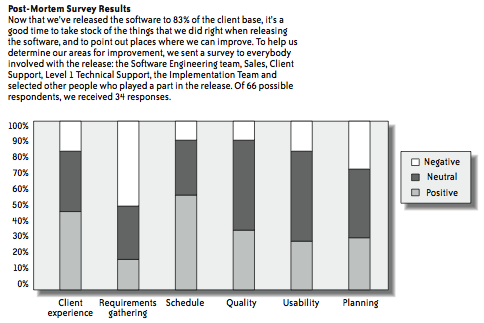

We definitely recommend using a survey where the questions are grouped together and each question is scored, so that you can start your postmortem report with an overview that shows the answers in a chart. (If you’re looking for a kind of “lessons learned template,” this is a really good start.)

The rest of the report delves into each individual section, pulling out specific (anonymous) answers that people wrote down or told you. Here’s an example:

Beta

Was the beta test effective in heading off problems before clients found them?

Score: 2.28 out of 5 (12 Negative [1 to 2], 13 Neutral [3], 9 Positive [4 to 5])

All of the comments we got about the beta were negative, and only 26% (9 of 34) of the survey respondents felt that the beta exceeded their expectations. The general perception was that many obvious defects were not caught in the beta. Suggestions for improvement included lengthening the beta, expanding it to more client sites, and ensuring that the software was used as if it were in production.

Individual comments:

- I feel like Versions 2.0 and 2.1 could have been in the beta field longer so that we might have discovered the accounting bugs before many of the clients did.

- We need to have a more in-depth beta test in the future. Had the duration of the beta been longer, we would have caught more problems and headed them off before they became critical situations at the client site.

- I think that a lot of problems that were encountered were found after the beta, during the actual start of the release. Shortly thereafter, things were ironed out.

- Overall, the release has gone well. I just feel that we missed something in the beta test, particularly the performance issues we are experiencing in our Denver and Chicago branches. In the future, we can expand the beta to more sites.

(Source: Applied Software Project Management, Stellman & Greene 2005)
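If you collect the survey answers electronically, the score line at the top of each section is easy to generate. Here’s a minimal Python sketch, assuming each answer is a whole number from 1 to 5 and using the same buckets as the example above (1 to 2 negative, 3 neutral, 4 to 5 positive). The function names and the sample scores are hypothetical: the scores were invented so that the buckets come out to 12, 13, and 9, and the average they produce is not the figure from the book.

```python
from collections import Counter
from statistics import mean


def summarize_question(scores):
    """Summarize the 1-to-5 scores that respondents gave one survey question."""
    if not scores:
        raise ValueError("no scores to summarize")
    if any(s not in (1, 2, 3, 4, 5) for s in scores):
        raise ValueError("scores must be whole numbers from 1 to 5")

    # Bucket each score: 1-2 negative, 3 neutral, 4-5 positive.
    buckets = Counter(
        "negative" if s <= 2 else "neutral" if s == 3 else "positive"
        for s in scores
    )
    return {
        "respondents": len(scores),
        "mean": round(mean(scores), 2),
        "negative": buckets["negative"],
        "neutral": buckets["neutral"],
        "positive": buckets["positive"],
        "positive_pct": round(100 * buckets["positive"] / len(scores)),
    }


def overview_row(question, scores):
    """Format one line of the overview at the top of the report."""
    s = summarize_question(scores)
    return (
        f"{question}: {s['mean']:.2f} out of 5 "
        f"({s['negative']} Negative [1 to 2], "
        f"{s['neutral']} Neutral [3], "
        f"{s['positive']} Positive [4 to 5])"
    )


# Invented sample data: 34 respondents, 12 negative, 13 neutral, 9 positive.
beta_scores = [1] * 6 + [2] * 6 + [3] * 13 + [4] * 6 + [5] * 3

if __name__ == "__main__":
    print(overview_row("Beta", beta_scores))
```

Running one row per question gives you the raw material for the overview chart, and the `positive_pct` value is the kind of number quoted in the section summary (9 of 34 respondents is 26%).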

There’s another approach to coming up with postmortem survey results that I think can be really useful. Jenny and I have spent the last few years learning a lot about the PMBOK® Guide, since that’s what the PMP exam is based on. If you’ve studied for the PMP exam, one thing you learned is that you need to document lessons learned throughout the entire project.

The exam takes this really seriously: you’ll actually see a lot of PMP exam questions about lessons learned, and understanding where lessons learned come from is really important for PMP exam preparation.

The PMBOK® Guide categorizes the activities on a project into knowledge areas. Since there are lessons learned in every area of the project, those categories (the knowledge area definitions) give you a useful way to approach them:

- How well you executed the project and managed changes throughout (what the PMBOK® Guide calls “Integration Management”)

- The scope, both product scope (the features you built) and project scope (the work the team planned to do)

- How well you stayed within your schedule or if you had serious scheduling problems

- Whether or not budget was tight, and if that had an effect on the decisions made during the project

- What steps you took to ensure the quality of the software

- How you managed the people on the team

- Whether communication—especially with stakeholders—was effective

- How well risks were understood and managed throughout the project

- If you worked with consultants, whether the buyer-seller relationship had an impact on the project

For each of these areas, you should ask a few basic questions:

- How well did we plan? (Did we plan for this at all?)

- Were there any unexpected changes? How well did we handle them?

- Did the scope (or schedule, or staff, or our understanding of risks, etc.) look the same at the end of the project as it did at the beginning? If not, why not?
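If you’d like a starting point for a “lessons learned template” built this way, the areas and questions above can be crossed into a blank outline. This is only a sketch: the area labels are shorthand for the bullets in this post rather than the official PMBOK® Guide knowledge area names, and `build_template` is a hypothetical helper, not something from the guide or the book.

```python
# Shorthand labels for the areas listed above (not official PMBOK names).
AREAS = [
    "Integration management",
    "Scope",
    "Schedule",
    "Budget",
    "Quality",
    "Team",
    "Communication",
    "Risk",
    "Consultants and vendors",
]

# The basic questions to ask about every area.
QUESTIONS = [
    "How well did we plan? (Did we plan for this at all?)",
    "Were there any unexpected changes? How well did we handle them?",
    "Did this look the same at the end of the project as it did at "
    "the beginning? If not, why not?",
]


def build_template(areas=AREAS, questions=QUESTIONS):
    """Return a plain-text outline with every question under every area."""
    lines = []
    for area in areas:
        lines.append(area)
        for question in questions:
            lines.append(f"  - {question}")
            lines.append("    Notes:")
        lines.append("")  # blank line between areas
    return "\n".join(lines).rstrip() + "\n"


if __name__ == "__main__":
    print(build_template())
```

The output is nine short sections with three prompts each, which you can paste into a document and fill in as you talk to each stakeholder.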

If you can get that information from your stakeholders and write it down in a way that’s meaningful and that you can come back to in the future, you’ll be in really good shape to learn the lessons you need to learn from any project. Even a failed one.