Non-functional requirements Q&A: We answer questions from readers about nonfunctional requirements and how to use them on a real software project.

Non-functional requirements: Planning out how well your software will work

A couple of months ago I wrote a post called Using nonfunctional requirements to build better software. It’s basically a step-by-step guide for creating an easy, practical technique to use nonfunctional requirements on a real software project, treating them in a way that’s similar to how a lot of Agile teams treat user stories, scenarios and other functional requirements: by sticking them on index cards and using them to do some lightweight planning.

Since then, I’ve gotten a lot of questions about nonfunctional requirements (or, as some people call them, non functional requirements, behavioral requirements, quality attributes, and probably half a dozen other names). Based on the questions I’ve been getting, a lot of people really seem to want a solid overview of exactly what they are:

- What are nonfunctional requirements?

- What goes into a good nonfunctional requirement?

- Is there a nonfunctional requirements checklist that I can use?

- How do I write down a nonfunctional requirement? Is there a nonfunctional requirements template I can use?

Luckily, Jenny and I addressed exactly those questions in our first book, Applied Software Project Management. Over the years, I’ve gotten feedback from people who read it (and other writing I’ve done about requirements) and told me it helped them get a handle on the concepts behind nonfunctional requirements. So I’ll do a little requirements Q&A and address those questions one by one, drawing from the book where possible. I’ve also posted an O’Reilly Community blog post called Understanding nonfunctional requirements with some additional information, which should help get to the bottom of the issue.

Q: What are non-functional requirements?

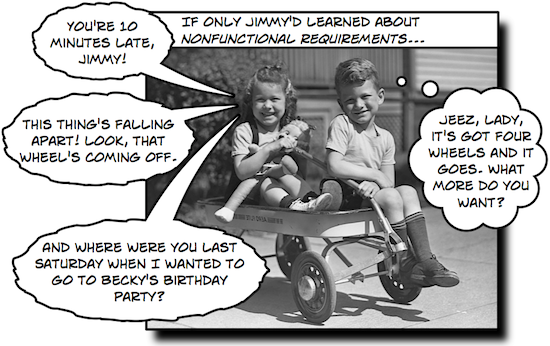

Non-functional requirements (also called behavioral requirements or quality attributes) describe how well a system performs its function. This is fundamentally different from the typical functional requirements that most of us are used to, which describe what that system does.

Here’s a quick example. Whenever I’m talking about requirements, I like to use a “search and replace” feature in a word processor or text editor as an example, because we’re all familiar with how it works. A functional requirement for “search and replace” might describe how the case-sensitive matching works (“If the original text was all uppercase, then the replacement text must be inserted in all uppercase”). A nonfunctional requirement, on the other hand, might describe the performance (“it must be able to replace 1000 search terms in a 3MB document in under 250ms on one of our standard test VMs running at 50% load”).

Q: What goes into a good nonfunctional requirement?

A good nonfunctional requirement is one that makes it clear to everyone on the project — including the user (or someone who really understands what the user needs) — exactly how the software has to perform. Remember, a good requirement (functional or nonfunctional) is about understanding and addressing the needs of a user.

Here’s what Jenny and I wrote about nonfunctional requirements in our first book:

Users have implicit expectations about how well the software will work. These characteristics include how easy the software is to use, how quickly it executes, how reliable it is, and how well it behaves when unexpected conditions arise. The nonfunctional requirements define these aspects about the system. — Applied Software Project Management, p113 (O’Reilly 2005)

It’s really easy for nonfunctional requirements to be unclear or ambiguous. The best way to make sure a nonfunctional requirement is clear and easy to use is to quantify it. So instead of saying that a task must be done quickly, write down the maximum number of seconds it must take to perform that task. The maximum size of a database on disk, the number of hours per day a system must be available, and the number of concurrent users supported are all examples of requirements that the software must meet but that do not change its behavior.
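
To see why quantifying helps, notice that a quantified requirement can be checked mechanically, while “must be done quickly” cannot. Here’s a minimal sketch of that idea in Python. The `QuantifiedRequirement` class, its field names, and the sample limits are all made up for this illustration; they don’t come from the book or from any real project.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QuantifiedRequirement:
    """A nonfunctional requirement stated as a measurable upper limit.

    Hypothetical structure, used only to illustrate quantification.
    """
    name: str
    unit: str
    maximum: float  # largest measured value that still satisfies the requirement

    def is_met(self, measured: float) -> bool:
        # The requirement holds when the measurement does not exceed the limit.
        return measured <= self.maximum


# Illustrative numbers only; real limits come from what your users need.
response_time = QuantifiedRequirement("Time to perform the task", "seconds", 2.0)
database_size = QuantifiedRequirement("Database size on disk", "GB", 50.0)

print(response_time.is_met(1.4))   # True: 1.4 seconds is within the 2.0 second limit
print(database_size.is_met(63.0))  # False: 63 GB exceeds the 50 GB limit
```

A requirement like “the system must be available a certain number of hours per day” is a minimum rather than a maximum, so it would need the comparison flipped; the sketch leaves that out to stay short.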

Q: Is there a nonfunctional requirements checklist that I can use?

We put together a nonfunctional requirements checklist that I’ve used many times on real projects. It’s based on a list of nonfunctional requirements we included in Applied Software Project Management.

Here’s one thing to keep in mind about this (or any other) nonfunctional requirements checklist: as you’re reading it, you’ll probably find yourself thinking, “Wait a minute, all my software needs to be flexible (or efficient, or robust, etc.).” Yes, that’s true, of course. But are there specific nonfunctional requirements that affect your project in particular, above and beyond what you do on every project? That’s what this checklist is for, and that’s what you should be thinking about when you write down nonfunctional requirements.

- Availability: Are there constraints on the system’s availability or uptime?

- Efficiency: Are there resources the system needs to be careful about monopolizing?

- Flexibility: Will the system need to be altered after deployment?

- Portability: How easy is it to move to another platform?

- Integrity: How sensitive is the project to data security, access, and privacy?

- Robustness: Do error conditions need to be handled gracefully?

- Scalability: Will the system need to handle a wide range of configuration sizes?

- Usability: Are there specific constraints on how the users will understand, learn and use the software?

If you’re interested in using this on a real project, you can read more about it in that O’Reilly Broadcast post about non-functional requirements, where I posted a relevant excerpt from Applied Software Project Management that goes into more detail about each of these.

Q: How do I write down a nonfunctional requirement? Is there a nonfunctional requirements template I can use?

Yes. We put together a software requirements specification template, and I’ve helped a lot of teams adopt it for their own projects over the years. When we put together our requirements templates, we put a lot of effort into streamlining them as much as possible. So this is a sort of “bare minimum” template for writing down use cases, functional requirements, and nonfunctional requirements.

Here’s an example of how we’d specify a nonfunctional requirement:

| Field | Description |
| --- | --- |
| Name | Nonfunctional requirement #7: Performance constraints for search-and-replace |
| Summary | The search-and-replace feature must perform a search quickly. |
| Rationale | If a search is not fast enough, users will avoid using the software. |
| Requirements | A case-insensitive search-and-replace performed on a 3MB document with twenty 30-character search terms to be replaced with a different 30-character search term must take under 500ms on one of our standard testing VMs at 50% CPU load. |
| References | See use case #8: Search |
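
A requirement written this specifically translates almost directly into an automated check. Here’s a rough sketch of what that might look like in Python. The `search_and_replace` function is a hypothetical stand-in for the real feature, and the helper names and the generated test document are assumptions made for this example. The sketch also doesn’t reproduce the standard testing VM or the 50% CPU load, so treat the timing it prints as illustrative only.

```python
import random
import re
import string
import time

LIMIT_SECONDS = 0.5               # "under 500ms" from the requirement
DOCUMENT_SIZE = 3 * 1024 * 1024   # a 3MB document of ASCII text
TERM_COUNT = 20                   # twenty search terms
TERM_LENGTH = 30                  # each 30 characters long


def random_term(rng: random.Random) -> str:
    """Build one random 30-character lowercase term."""
    return "".join(rng.choice(string.ascii_lowercase) for _ in range(TERM_LENGTH))


def build_document(terms: list[str], rng: random.Random) -> str:
    """Build filler text with uppercase copies of the terms sprinkled in."""
    filler = "the quick brown fox jumps over the lazy dog "
    pieces = []
    size = 0
    while size < DOCUMENT_SIZE:
        if rng.random() < 0.01:
            # Uppercase on purpose, so only a case-insensitive search finds it.
            piece = rng.choice(terms).upper() + " "
        else:
            piece = filler
        pieces.append(piece)
        size += len(piece)
    return "".join(pieces)[:DOCUMENT_SIZE]


def search_and_replace(document: str, replacements: dict[str, str]) -> str:
    """Hypothetical stand-in for the feature under test (case-insensitive)."""
    for term, replacement in replacements.items():
        # A function replacement avoids any backslash processing by re.sub.
        document = re.sub(re.escape(term), lambda _m, r=replacement: r,
                          document, flags=re.IGNORECASE)
    return document


def measure_seconds(document: str, replacements: dict[str, str]) -> float:
    """Time a single search-and-replace run."""
    start = time.perf_counter()
    search_and_replace(document, replacements)
    return time.perf_counter() - start


rng = random.Random(7)  # fixed seed so every run uses the same document
terms = [random_term(rng) for _ in range(TERM_COUNT)]
replacements = {term: random_term(rng) for term in terms}
document = build_document(terms, rng)

elapsed = measure_seconds(document, replacements)
print(f"{elapsed * 1000:.0f}ms, requirement met: {elapsed < LIMIT_SECONDS}")
```

On a real project you’d swap in the actual feature and run the check in the environment the requirement names, since a timing taken on a developer laptop says little about the standard testing VM.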

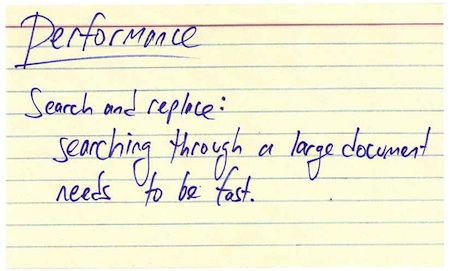

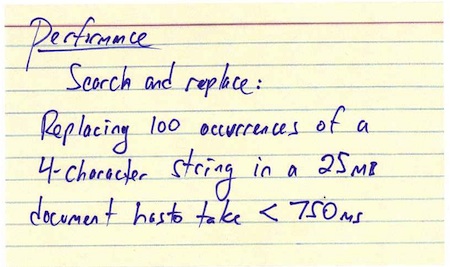

And that gets back to the blog post I mentioned earlier, Using nonfunctional requirements to build better software. If you’re working on a team that uses an agile process to build software, there’s a good chance that you already write down a lot of your requirements on index cards. In that post, I outline a method that I’ve had a lot of success with in my own projects.

I went into a lot more detail in that post, but here’s a quick recap. First, I write the requirement itself on the front of an index card:

and on the back I’ll write a specific test to make sure the requirement is implemented:

Then I use it for planning the project to make sure it actually gets included — you can see more about it in the post. As far as documenting nonfunctional requirements goes, that’s actually a really efficient way of doing it, and I’ve seen it work well on agile projects.

I hope that answers some of your questions about using nonfunctional requirements in software projects. Obviously, I’d be thrilled if you took a look at the requirements chapter in Applied Software Project Management — Jenny and I gave a lot of practical advice about how to use requirements on a software project. And I’ve got other requirements posts on Building Better Software as well. But if they don’t answer your questions, feel free to ask (or send them to us).