Yesterday, a Slashdot article asked an age-old question:

> One of the worst problems [in my company] is a lack of process documentation. All knowledge is passed down via an oral tradition. Someone gets hit by a bus and that knowledge is lost forevermore. Now I know what I’ve seen in the past. There’s the big-binder-of-crap-no-one-reads method, usually used in conjunction with nobody-updates-this-crap-so-it’s-useless-anyway approach. I’ve been hearing good things about company wikis, and mixed reviews about Sharepoint and its intranet capabilities. And yes, I know that this is all a waste of time if there’s no follow-through from management. But assuming that the required support is there, how do you guys do process documentation?

This question seems to come up over and over again. The funny thing is that it almost always leads straight to a long discussion of the technique for gathering process documentation, and then a discussion of the mechanism for storing it. That’s the question I think the reader thought he was asking: how to “copy down” the process by looking at how the people on the team build the software and putting it into a “complete” set of documents, and whether to use a wiki, SharePoint, a version control system or some other repository to hold the documents. And the discussion that followed from that Slashdot post should be pretty familiar to anyone who’s tried to solve this problem in real life. Some people talk about systems to store documents, others talk about the virtues of keeping them up to date, there’s a healthy dose of “write down what you’re currently doing” or suggestions for incident logging, an apparent epidemic of bus drivers who have it in for the one guy who knows how everything in the company works, and lots of talk of cataloging, updating and verifying.

I’ve been through that before, and I’ve bought into many of those ideas in the past. And you know what? It wasn’t particularly useful. I know that in many process circles, that’s a heretical idea. In fact, if you’d told me back in 1999 that it’s not useful, I would have laughed at you. Of course you’re supposed to start by documenting the complete process! How else do you know what you’re improving? There seems to be an unspoken rule that we’re supposed to be striving for a fully documented, constantly improving process. And in some shops, that does make sense. But it’s a very, very hard thing to do, and in practice it’s almost impossible to put in place from the ground up.

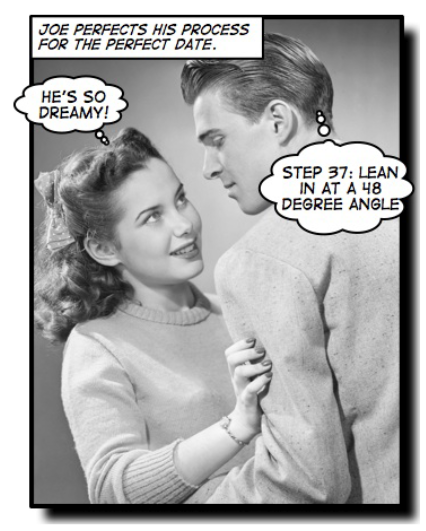

This is a really hard thing for a lot of software people to accept. Our nature, as programmer types, is to strive for complete systems. When we build software, we try to handle every possible special case. We’re overly pedantic and literal; it’s like exceptions and missing cases just get under our skin. So when we’re presented with the problem of how to improve the way a team or a company builds software, the first thing we want to do is come up with a system that describes the complete process for building software, mapping out every possible special case and exception that a project might come to. And it makes sense that we’d want to test that process to make sure it’s accurate, correct any errors, and put everything in a repository that gives us complete access to the one true way that we build software.

The problem is that people don’t really work that way. Process engineering suffers from a serious problem: it seems simple when you think about it in the abstract, but once you start trying to document precisely and completely how a team builds software, you run into an enormous number of special cases. Architecture is always finished and signed off before coding begins, right? Oh, wait, except for Joe’s project, where we did 30% of the architecture and started building the code for that while Louise and Bob worked on the next piece of architecture. Oh yeah, and then there’s that project that’s going to be broken into three phases, and we don’t really know how the third part is going to work.

I’ve seen that pattern many times, and it usually plays out in one of two ways. Either you end up with high-level documentation that lays out a very general process that’s trivial to follow but doesn’t really provide any useful guidance (like a big chart that shows that testing comes after coding, which comes after design), or you end up with a tangled mess of special cases and alternative paths that seems to get updated every time there’s a new project. Both of those technically fulfill the goal of process documentation, which is great if your job is to document the process. But neither is particularly useful if your goal is to actually build better software.

There’s an easy solution to this problem: don’t document your software process. Or, at least, don’t start out by documenting the complete software process. Instead, take a step back and try to figure out what problems you’re facing. What about your process needs to be fixed? Do you have too many bugs? Do you deliver the software too late? Does your CFO complain that projects are too expensive? Do you deliver a build to your users, only to have them tell you that it looks fine and all, but wasn’t it supposed to do this other thing? Those are all different problems, and they have different solutions.

That’s what Jenny and I teach in our first book, Applied Software Project Management. We call it the “diagnose and fix” approach: first you figure out what problems are plaguing your projects, and then you put in very limited fixes that address the most painful problems that keep you from building better software. People don’t just wake up one day and say, “We’ve got to totally change the way we build software.” They don’t start documenting the software process because things are going just fine. People hate change, and they don’t start making changes to the way they build software unless they have a good reason. So look for that reason, find the pain that hurts the most, and make the smallest change that you can to fix that one problem without rocking the boat. Then find the next most painful thing, and put in the smallest change that you can to fix that. This is something you can keep doing indefinitely, in a way that doesn’t disrupt those parts of your projects that are working just fine. Because the odds are that there are plenty of things that the team is doing right! If it ain’t broke, don’t fix it.

So what about the question of how to actually document the process changes that you do want to make? That’s a very practical problem, and one that we had to handle in our book. After all, we do give you processes for planning, estimating, documenting, building and testing software. And we wanted to do it in a way that was programmer-friendly, with as little cognitive overhead as possible.

We decided to use process scripts — that’s scripts like an actor reads, not scripts like a shell runs — to describe our processes. We developed these scripts based on use cases (which we talk about in detail in the book). If you take a look at the use case page from the book’s companion website, you can see an example of a use case, followed by a typical script that you’d follow to develop use cases for your project. That particular script is very iterative, because use case development (like many great software practices) should be a highly iterative process. We’ve got examples of many scripts for the various practices and processes: ones for planning projects, reviewing deliverables, and building and testing software.

As for storing these scripts, I’ve used all sorts of ways to do it in the past. I’ve used wikis, version control systems (both Subversion and VSS, depending on what’s in place at the company), even plain old folders full of MS Word documents. The actual mechanics of storing documents aren’t particularly interesting, and are pretty much interchangeable for process documentation. Processes shouldn’t change all that often, because change is very disruptive to a company. The changes should be small, incremental, and easily understood by the team… and the team should agree that they’re useful! Because the biggest problem with process changes — and several posts in the Slashdot thread bring this up — is that they don’t “stick”. But making those changes stick is easy. Just make small changes that the team buys into, and that actually get you to build better software.

That’s easier said than done, of course. Lucky for us, too! Otherwise there wouldn’t be a market for our books or training.