I was working on a project earlier today. Now, I almost always do test-driven development, where I’ll build unit tests that verify each class first and then build the code for the class after the tests are done. But once in a while, I’ll do a small, quick-and-dirty project, and I’ll think to myself, “Do I really need to write unit tests?” And then, as I start building it, it’s obvious: yes, I do. It always comes at a point where I’ve added one or two classes, and I realize that I have no idea if those classes actually work: I’ve written a whole bunch of code, and I haven’t tested any of it. And that starts making me nervous. So I turn around and start writing unit tests for the classes I’ve written so far… and I always find bugs. This time was no exception.

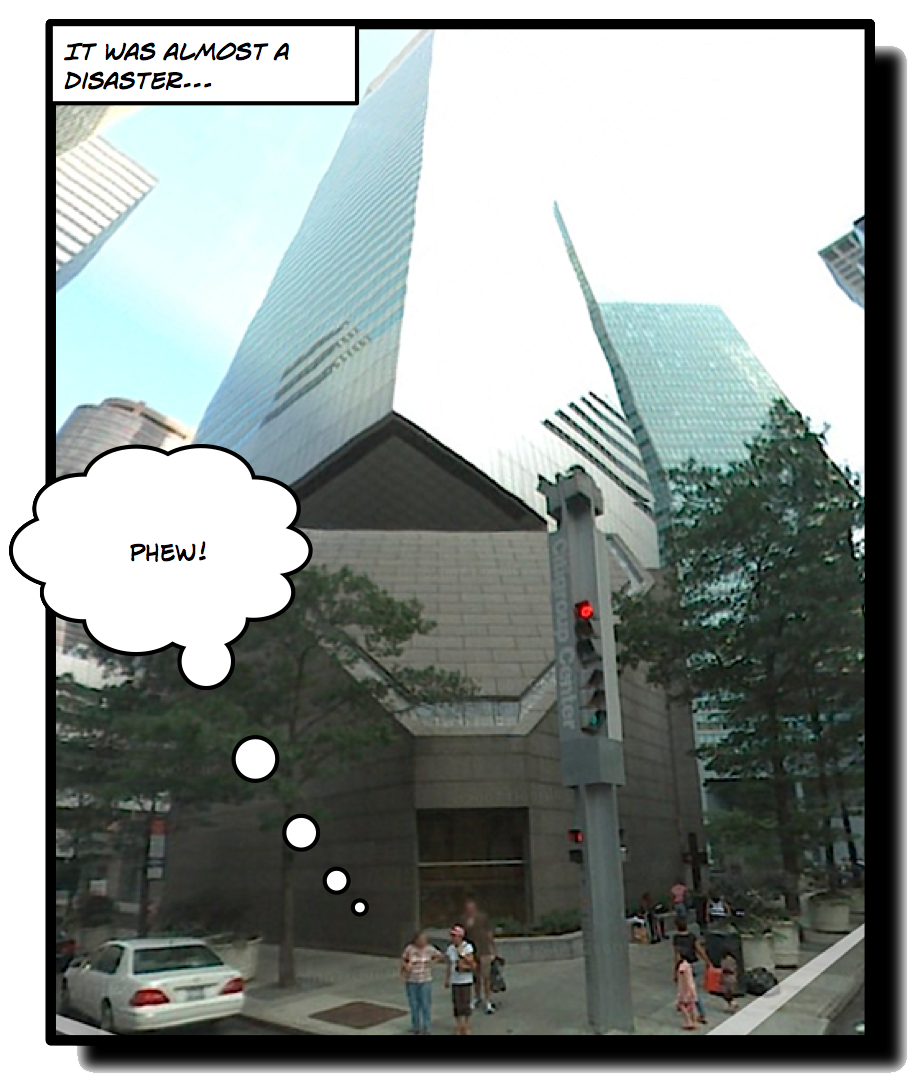

For some reason, that exercise reminded me of the story of the nearly disastrous Citicorp Center building.

Citicorp Center was one of the last skyscrapers built during New York City’s skyscraper and housing boom of the 1960s and 1970s. A lot of New Yorkers today probably don’t realize that it was actually one of the more interesting feats of structural engineering at the time. The building was built on a site occupied by St. Peter’s, a turn-of-the-century Lutheran church that would have to be demolished to make way for the skyscraper. The church agreed to let Citicorp demolish it, on one condition: that it be rebuilt on the same site.

The engineer, Bill LeMessurier, came up with an ingenious plan: put the base of the building up on columns, and cantilever the edge of the building over the church. Take a look at it on Google Maps’ Street View — you can pan up, navigate around, and see just how much of a structural challenge this was.

The building was completed in 1977. A year later, LeMessurier got a call from an engineering student studying the Citicorp building. Joe Morgenstern’s excellent 1995 New Yorker article about the building describes it like this:

> The student wondered about the columns–there are four–that held the building up. According to his professor, LeMessurier had put them in the wrong place.
>
> “I was very nice to this young man,” LeMessurier recalls. “But I said, ‘Listen, I want you to tell your teacher that he doesn’t know what the hell he’s talking about, because he doesn’t know the problem that had to be solved.’ I promised to call back after my meeting and explain the whole thing.”

Unfortunately, LeMessurier was mistaken, and in the article he describes the problem in all its gory detail. It’s a fascinating story, and I definitely recommend reading it — it’s a great example of how engineering projects can go wrong. It’ll probably seem eerily familiar to most experienced developers: after a project is done, someone uncovers something that seems to be a tiny snag, which turns out to be disastrous and requires a huge amount of rework.

Rework in a building isn’t pretty. In this case, it required a team to go through and weld steel plates over hundreds of bolted joints throughout the building, working at night after the offices had emptied so nobody would find out and panic.

But what I found especially interesting about the story had to do with testing the building:

> On Tuesday morning, August 8th, the public-affairs department of Citibank, Citicorp’s chief subsidiary, put out the long delayed press release. In language as bland as a loan officer’s wardrobe, the three-paragraph document said unnamed “engineers who designed the building” had recommended that “certain of the connections in Citicorp Center’s wind bracing system be strengthened through additional welding.” The engineers, the press release added, “have assured us that there is no danger.” When DeFord expanded on the handout in interviews, he portrayed the bank as a corporate citizen of exemplary caution–“We wear both belts and suspenders here,” he told a reporter for the News–that had decided on the welds as soon as it learned of new data based on dynamic-wind tests conducted at the University of Western Ontario.
>
> There was some truth in all this. During LeMessurier’s recent trip to Canada, one of Alan Davenport’s assistants had mentioned to him that probable wind velocities might be slightly higher, on a statistical basis, than predicted in 1973, during the original tests for Citicorp Center. At the time, LeMessurier viewed this piece of information as one more nail in the coffin of his career, but later, recognizing it as a blessing in disguise, he passed it on to Citicorp as the possible basis of a cover story for the press and for tenants in the building.

Tests were at the center of this whole situation. It turned out that insufficient testing was done at the beginning of the project. Now, more tests were used to figure out how to handle the situation. Tests got them into the situation, and tests got them out.

So what does this have to do with software?

I have a hunch that anyone who’s done a lot of test-driven development will see the relevance pretty quickly. The quality of your software — whether it does its job or fails dramatically — depends on the quality of your tests. It’s easy to think that you’ve done enough testing, but once in a while your tests uncover a serious problem that would be painful — even disastrous — to repair. And as LeMessurier found, it’s easy to run tests that give a false sense of security because they’re based on faulty assumptions.
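To make that last point concrete, here is a minimal sketch in Python. Everything in it is hypothetical and invented for illustration (the `split_bill_naive` and `split_bill` functions and the bill-splitting scenario are not from any real project). The first test only checks the case the programmer had in mind, so the flawed version passes it. The flaw only shows up once a test covers the input that the first test quietly assumed away.

```python
import unittest


def split_bill_naive(total_cents, people):
    """Hypothetical first attempt: give everyone the same floor share."""
    return [total_cents // people] * people


def split_bill(total_cents, people):
    """Revised version: hand the leftover cents out one at a time."""
    share, leftover = divmod(total_cents, people)
    return [share + 1 if i < leftover else share for i in range(people)]


class SplitBillTests(unittest.TestCase):
    def test_even_split(self):
        # The "obvious" case. Both versions pass, so this test alone
        # says nothing about the case nobody thought to check.
        self.assertEqual(split_bill_naive(1000, 4), [250, 250, 250, 250])
        self.assertEqual(split_bill(1000, 4), [250, 250, 250, 250])

    def test_uneven_split_keeps_every_cent(self):
        # The input the first test assumed away: a total that does not
        # divide evenly. The shares must still add up to the total.
        self.assertEqual(sum(split_bill(1000, 3)), 1000)

    def test_naive_version_loses_a_cent(self):
        # Documents the hidden flaw: 333 * 3 == 999, so one cent vanishes.
        self.assertEqual(sum(split_bill_naive(1000, 3)), 999)
```

Saved to a file, the tests can be run with `python -m unittest`. With only `test_even_split` in place, the naive version would look finished.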

I’ve had arguments many times over my career with various people about how much testing to do. I can’t say that I’ve always handled them perfectly, but I have found a tactic that works. I point to the software and ask which of the features doesn’t have to work properly. But it’s good to remind myself how easy it is to question the importance of tests. It’s so easy, in fact, that I did it myself earlier today. And that’s why it’s important to have examples like Citicorp Center to remind us of how important testing can be.